Porting wifibroadcast to Android

This post shows my approach to getting my FPV wifi transmission system to run on an Android device.

—

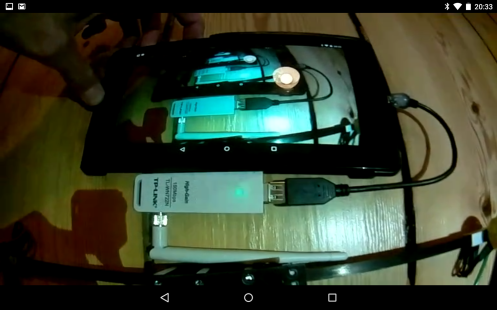

Motivation sometimes comes in strange ways. In this case it was my laptop’s battery that died completely. I had just finished my quad, and one week later my laptop was bound to the wall due to the failed battery. So I could not fly anymore 😦 That was the perfect excuse to port the wifibroadcast stuff over to Android. To be honest: I wouldn’t want to do this a second time. It was quite painful. I can’t give you exact reproduction steps since this is highly device dependent. But you should gather enough info to redo the stuff for your device (I used a Nexus 7 2013 running Android 5). You’ll find a screenshot from the Android device here (so the system has more or less photographed itself. How I love these recursive wormholes :):

This project needed to achieve the following sub-goals:

* Root the device (many manuals available on how to do that)

* Support TL-WN722N wifi card in android kernel

* Cross-compile wifibroadcast for android

* Decode raw h264 streams

* Display the video data

TL-WN722N wifi card kernel support

Although most modern Android devices support host mode over USB OTG, the number of activated USB drivers in the vanilla kernels is quite low. You can be pretty sure that there will be no USB wifi drivers enabled, so there is no way around a custom kernel build. This page has a detailed description on how to build your own kernel for the official “Google” Android. Kernel compilation for Cyanogenmod seems to be a bit more complicated, which is why I went with the official kernel. Using “make menuconfig” to enable the driver “ath9k_htc” for the TL-WN722N is all there is to do. After installing the new kernel on your device you also need the firmware for the card. Note that you probably can’t use the firmware from your Ubuntu machine (or similar) because that probably uses the newest v1.4 firmware. The Android kernel only supports v1.3, which you can get here. To copy it to the right location, enter the following on the Android shell (root required):

    #make system writable
    mount -o rw,remount /system
    cp /sdcard/htc_9271.fw /system/etc/firmware

Now your wifi card should run. Note that the card is “down” by default, so you won’t notice anything when you plug it in. Look into dmesg to see if everything went well. You can safely ignore error messages in dmesg about the “ASSERT in hdd_netdev_notifier_call”. They originate from some unclean Qualcomm wifi drivers for host mode…

Running wifibroadcast on Android

Now that the wifi card runs, how do we use it with wifibroadcast? Well, I was lazy and did not bother to port it over to Android. Instead I used an Ubuntu chroot environment where I could easily build and run it as I would on a real Linux machine. Again, you will find a lot of pages describing how to create an “Ubuntu in a file”. I won’t repeat these steps here. Just the few lines which get me from the Android shell into the system:

    #this mounts the ubuntu image into the directory "ubuntu"
    mount -o loop -t ext4 ubuntu.img ubuntu
    #this chroots into the system
    unset LD_PRELOAD
    export USER=root
    export HOME=/root
    chroot ubuntu /bin/bash

Now you have a full-powered Ubuntu system available on your Android device 🙂 In there you can do the usual things:

    apt-get install mercurial
    hg clone https://bitbucket.org/befi/wifibroadcast
    cd wifibroadcast
    make

And then you have a usable wifibroadcast. Inside the Ubuntu system you can then set up your wifi card:

    ifconfig wlan1 down
    iw dev wlan1 set monitor otherbss fcsfail
    ifconfig wlan1 up
    iwconfig wlan1 channel 13

Decode and display video

Remember, the rx program will receive raw h264 frames that need to be decoded and displayed. My first attempt was to install the “XServer XSDL” app (excellent app by the way) which gives your chroot system graphical output capabilities. From there I just used the standard Ubuntu gstreamer tools to decode and display the video. Unfortunately this was a bit too slow and had a latency of around 500ms (too much to be usable). I also tried some of the video streaming apps from the Play store but I found that none of them was suitable. So I had to write my own… 😦

I wrote an app that opens a UDP socket on port 5000 for receiving raw h264 data. The incoming data is parsed for “access units” and these are forwarded into a MediaCodec instance. The decoded frames are then directly rendered onto a Surface object. You can grab the source code of it here:

hg clone https://bitbucket.org/befi/h264viewer

This repository contains an “Android Studio” project. Use “import project” to open it. It targets Android API 21 (Android 5.0 Lollipop). There is also a ready-to-install .apk under “app/build/outputs/apk/app-debug.apk”.
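To give an idea of what the parsing step involves, here is a minimal Python sketch (this is not the app's actual Java code). It assumes the stream is an H.264 Annex B byte stream that uses the usual `00 00 01` / `00 00 00 01` start codes, splits it into NAL units and reads the NAL unit type from the first byte. The function names are made up for this example, and grouping the NAL units into access units for the decoder is left out.

```python
START_CODE = b"\x00\x00\x01"


def split_nal_units(stream: bytes) -> list:
    """Split an Annex B byte stream into NAL units (start codes removed)."""
    units = []
    pos = stream.find(START_CODE)
    while pos != -1:
        begin = pos + len(START_CODE)
        nxt = stream.find(START_CODE, begin)
        end = len(stream) if nxt == -1 else nxt
        # A 4-byte start code (00 00 00 01) leaves one zero byte at the end
        # of the previous unit; a NAL unit never ends in 0x00, so strip it.
        unit = stream[begin:end].rstrip(b"\x00")
        if unit:
            units.append(unit)
        pos = nxt
    return units


def nal_type(unit: bytes) -> int:
    """Return the NAL unit type (lower 5 bits of the first byte)."""
    return unit[0] & 0x1F


# Hypothetical example data: SPS (type 7), PPS (type 8), IDR slice (type 5)
example = (b"\x00\x00\x00\x01\x67\xaa"
           b"\x00\x00\x00\x01\x68\xbb"
           b"\x00\x00\x01\x65\xcc\xdd")
for unit in split_nal_units(example):
    print(nal_type(unit), unit.hex())
```

A real receiver would additionally have to cope with NAL units that span several UDP packets, which this sketch ignores.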

How to use that program? Very simple: Just send raw h264 data over UDP to port 5000 of your android device and it gets displayed. If your Raspi is in the same network as your android device you could use something like this:

raspivid -t 0 -w 1280 -h 720 -fps 30 -b 3000000 -n -pf baseline -o - | socat -b 1024 - UDP4-DATAGRAM:192.168.1.100:5000

where 192.168.1.100 is the IP address of your android device. Why have I used “socat” instead of “netcat”? Well, netcat is a line-based tool. Since we are sending binary data it usually takes some data until something similar to a newline is found (netcat triggers the packet transmission on this condition). Most often this will be more data than the maximum packet size. Therefore, netcat packets are usually fragmented IP packets (something you should avoid). Socat just sends unfragmented packets when the threshold (in this case 1024bytes) is reached.
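If you do not have socat at hand, the same idea can be sketched in a few lines of Python: read the binary stream in blocks of at most 1024 bytes and send each block as one UDP datagram. This is only an illustration under my assumptions, not a drop-in replacement for socat; the function names are made up, while the port 5000 and the 1024 byte block size are the values used above.

```python
import socket


def split_into_datagrams(data: bytes, payload_size: int = 1024) -> list:
    """Cut a byte string into payloads of at most payload_size bytes."""
    return [data[i:i + payload_size] for i in range(0, len(data), payload_size)]


def send_stream(source, host: str, port: int = 5000, payload_size: int = 1024) -> None:
    """Read a binary file-like object and send it as small UDP datagrams."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        while True:
            block = source.read(payload_size)  # returns b"" at end of stream
            if not block:
                break
            # Each block stays well below a typical MTU, so no IP fragmentation
            sock.sendto(block, (host, port))
    finally:
        sock.close()
```

Calling `send_stream(sys.stdin.buffer, "192.168.1.100")` at the end of a pipe would then play the role of the socat command (with `import sys` added).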

How to use that with wifibroadcast? Again, very simple. On the raspberry execute the usual command to broadcast your video into the air:

raspivid -t 0 -w 1280 -h 720 -fps 30 -b 3000000 -n -pf baseline -o - | sudo ./tx -r 3 -b 8 wlan1

Inside the chrooted Ubuntu on your android device execute:

./rx -b 8 wlan1 | socat -b 1024 - UDP4-DATAGRAM:localhost:5000

All this does is to forward the packets from “rx” to the UDP port 5000 of your device. And voilà, you should see your camera’s image.

Summary

The latency is ok and should be usable. My PC is still a little bit faster but that is not too surprising. I have some ideas on how to optimize everything and will keep you posted. Concerning battery life I was quite surprised. The app and wifi dongle drained the battery by 14% in one hour of runtime. So I’m quite confident that this would allow me to stream for at least 5 hours with a full battery.
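The battery estimate is a simple extrapolation. As a small sketch, assuming the drain stays constant at the measured 14% per hour (which a real battery will not do exactly):

```python
def runtime_hours(drain_percent_per_hour: float, usable_percent: float = 100.0) -> float:
    """Estimated runtime assuming a constant drain rate (an assumption)."""
    return usable_percent / drain_percent_per_hour


# 14% per hour is the value measured above
print(round(runtime_hours(14.0), 1))
```

A linear extrapolation gives roughly 7 hours, so 5 hours leaves some margin for a battery that does not discharge linearly.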

Another alternative I’m thinking about is to use a Raspberry with an HDMI display to show the video stream. I’ve fiddled around a bit and maybe in the next post I’ll present my OMX pipeline that does the decoding and displaying of the video on the Raspberry’s GPU (thus the CPU idles). I was actually surprised that this gave me even lower latency compared to a gstreamer pipeline running on my PC. The downside is that this is a bit more complicated hardware-wise. raspi+display+display-controller+wifi-card+battery+5v-dc-dc-converter could get a bit messy… we’ll see 🙂

How much lower was the latency using the PI for decoding? I can currently get an average of 140ms decoding on my laptop using gstreamer/UDP but would love to be able to get less if possible.

Comparing the transport over wifibroadcast with plain UDP should not change much latencywise. And PC vs PI is currently unknown. I haven’t measured it. They are pretty close together with maybe a tiny advantage on the PI. By adapting the h264 parameters you could gain some ms. https://sparkyflight.wordpress.com/ has tested a lot of variations.

I read about your Android port, and maybe you have tried what I describe below.

In the rx part I would like to use the stream in oF (a C++ framework). I tried the direct stdin method and a gstreamer pipeline to read the video. This works, but I get 1 frame per minute or less. I think that stdin is not threaded, so it is locking the app.

Is it possible in the RX part to create a local stream and capture it on the same computer with another app? I need to be able to apply some shaders to the stream in order to get barrel and chromatic distortion correction for the Oculus Rift.

Have you tried something similar in the android port? Does it increase the latency?

hi befi,

are you planning on further development on android as an rx-device for wifibroadcast?

i got it running on an nexus 7 2012 (http://fpv-community.de/showthread.php?64819-FPV-Wifi-Broadcasting-HD-Video-Thread-zum-Raspberry-HD-Videolink-fon-Befi&p=867402&viewfull=1#post867402)

but using it is quite uncomfortable because of the chroot to ubuntu. having your rx program compiled for native android would be great, together with gstreamer for android.

do you think, system would improve regarding latency having it all inside android with hopefully a shorter pipeline? my experience right now is, a raspi has lower latency than my nexus 7, although it has a lot less cpu power.(ok, nexus 7 2012 isn’t bleeding edge any more)

for example a lg g3 would be a nice device with high resolution for using it inside goggles.

and last but not least, thanks a lot for your brilliant work

hi tomm

wow, as far as I know you are the only other person that also went down that painful road 😉 did you use my app for displaying the video?

because android is such a pain to develop on i stopped completely working on it. although i totally agree that an android device would be a perfect receiving device.

making things “play store ready” would require a lot (!) of work. one would need to

1) Provide a driver for a USB wifi card. This should be technically possible by using the Android Host API (https://developer.android.com/guide/topics/connectivity/usb/host.html ) and porting the linux driver. But this would take weeks, maybe even months…

2) Having the driver ready, the port of wifibroadcast to java or NDK should be easy to do (compared to the driver).

3) The problem of decoding and displaying the video is very simple and already solved in my app.

concerning latency, i think this would not change much. most part of the latency comes from the h264 decoder and this cannot be tweaked much. unfortunately.

well, you showed the way, so it wasn’t too painful 😉 just a lot of reading about android kernel compilation, but there is plenty of information on that. especially for nexus devices, it’s not that hard, toolchains and kernelsources, everything is available. the harder part for me was a working chroot, i tried too many different ways and messed up my tablet with different busybox installs. i discovered sshdroid too late, till then i used adb shell over usb and that was really painful.

finally, i hoped some of the sophisticated guys would jump on it, but no luck so far 😦

and a “play store ready” app would be nice, but i think not strictly necessary. people who are dealing with that kind of stuff are at least a bit “techie”

rooting a device and changing the kernel is doable, so the driver part should be ok.

still, you would have to compile a kernel for every different device, but i’m sure people would agree on not too many different devices which are suitable.

so for me, an android phone is still the perfect device for receiving. costs for a raspi + high resolution (touch) display + power supply isn’t much lower than a last years phone.

so the missing link for me is the android software part.

and of course i used your android app for displaying, it worked out of the box 😉

During our discussion an idea for a shortcut to a “play store ready” app popped out in my mind. Instead of reimplementing the wifi driver for android maybe it would be possible to use the card without exactly knowing what we are doing?

I used wireshark to monitor the USB traffic coming from the WN722 card during monitor mode. All packets were received in a single transfer, so it should be fairly easy to extract the wifibroadcast packets using the android host api. The bigger problem is: How to get the wifi card on android into monitor mode without a driver? I experimented a bit with the usbrevue tools (https://github.com/wcooley/usbrevue ) and captured all the writes the linux driver issues when plugging the card in. The firmware load could be easily spotted right at the beginning. However, plain replaying of the data back into the device (with an unloaded kernel driver of course) did not work, sadly. This would be extremely simple: Capture all the writes from a proper kernel driver and replay this dump on an android device without really understanding what’s going on. Mh… any help would be appreciated 🙂

i would help, but i have no idea how 😦

i still think, changing kernels on an android device is doable for non-programmers. at least, i managed to, and this is kind of a proof 🙂

and i’m using 2.3 ghz and not all people want to use the same channel, and if i understood the way you described correctly, then we would still need different dumps for different channels etc.

speaking of android kernels again: i already tested whether i could compile a kernel for a newer device. as already mentioned, the lg g3 looks interesting to me. this time i used sources from xda-developers and a sabermod toolchain. the hardest thing was finding a working toolchain: newest is not always best. 😉 reconfiguring the kernel, applying the 2.3 ghz patches and compiling was straightforward. i just could not try it out, since i do not own an lg g3.

buying one only makes sense to me if someone or several people are willing to jump on that train.

i don’t have the skills for porting your rx to android nor programming a touch enabled gui for channel-switching, gst-pipeline configuration etc.

so i still hope ….

Wouldn’t it be a possibility to Receive the Data with the Raspberry Pi and then transmit them over USB to the Android Smartphone? So it’s no driver needed and a “simple” app would do the Job.

Maybe it’s even possible to still use the Raspberry to decode and send the decoded Data to the USB Port.

Mh, I never followed that thought because I was always looking for a software-only solution. But the more I think about your idea, the more I like it.

Sending the video data uncompressed seems unfeasible. The data rate explodes right after the decompression. But forwarding the compressed data should be no big deal. And there it might even be possible to use normal wifi. This would give you a true wireless experience like the fatsharks. Leave all the heavy raspi, battery and n-times diversity antenna setup on the ground and connect your phone to the receiver via wifi. This should not be an issue since at that short distance you are almost sure to have a good connection. And a simple transmission of the data shouldn’t add more than 10ms latency… mh, this sounds really good.
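To put a rough number on “explodes”: a quick back-of-the-envelope sketch, assuming the decoded frames are stored as YUV 4:2:0 (12 bits per pixel, an assumption about the decoder output) and using the 1280x720, 30fps, 3000000 bit/s settings from the raspivid commands in the post:

```python
def raw_bitrate(width: int, height: int, fps: int, bits_per_pixel: int = 12) -> int:
    """Bit rate of uncompressed video in bit/s (12 bpp = YUV 4:2:0, assumed)."""
    return width * height * fps * bits_per_pixel


raw = raw_bitrate(1280, 720, 30)   # decoded stream
compressed = 3_000_000             # h264 bit rate passed to raspivid with -b
print(raw / 1e6, "Mbit/s raw,", round(raw / compressed), "times the h264 rate")
```

Under these assumptions the decoded stream is around 330 Mbit/s, about two orders of magnitude more than the compressed one.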

Unfortunately I am quite busy in the next weeks/months but maybe someone else could try it out? One would just have to use my “Porting wifibroadcast to Android” guide and replace the chroot Linux with a Raspi that is connected to the Android device over wifi. Damn, I really would like to try that right now 🙂

And it would even be possible to connect more than one device at the same time. They just need your app and can watch my flight.

Unfortunately my coding skills are far away from realizing it..

I will try it with chroot in the first shot to try everything out, and until then there is maybe a solution with a Raspberry access point 🙂

hi befi, don’t you think, we will run into problems with the 2.4 ghz transmitter nearby?

or do you think, it doesn’t matter, cause of the short distance? my wlan at home drops performance, as soon as i switch on my 9x. or are you talking about 5ghz wlan for the local connection.

however, it would be excellent, if it’s going to work that way. 🙂

yes, i was thinking of 5ghz wifi or 2.4ghz on a different channel (if your RC allows you to do so. For example the HOTT mod I described in my blog would not work because it occupies the lower half of the spectrum)

Isn’t the TX code all we are looking for? The only difference is that the stream doesn’t come from the cam but from the RX program.

It sounds very simple to realize, but I’m sure it only sounds that way ;D

I just got it working 🙂

The Raspberry starts an AP with a CSL300 Wifi Stick and streams the video with socat to my Android device (LG g3). But unfortunately it seems that it’s too much traffic for the usb controller.

I receive only a lot of pixels, no real picture, and the packet loss also rises extremely as soon as there is any traffic on the AP (even when I’m logging in and receiving the IP address, you can see a lot of packet losses).

Any suggestions for solving the problem?

I’m using 2 WN722N for receiving the stream and 1 CSL 300 for the AP right now. The AP is working at 5Ghz.

What type of computer are you using? Raspberry 1 or 2?

Raspberry Pi 1 B

Will try Raspberry 2B soon, hopefully it’s a bit faster

With RasPi 2 it just works like a charm 🙂

I had to change the receiving wn722n to mcs1, otherwise it wasn’t usable.

I tested it with only 2 wn722n, the goal is 4. Have to see what the usb traffic says..

Next step is to view it side by side.. But I’ve never programmed anything for android..

I have to correct myself, the problem was the socat pipe I used.

I tried to broadcast it, to be able to receive it with multiple devices. The command was:

socat -b 1024 - UDP4-DATAGRAM:192.168.0.255:5000,broadcast

Unfortunately, this doesn’t seem to work properly.

I have to use the IP address of my Android device:

socat -b 1024 - UDP4-DATAGRAM:192.168.0.151:5000

So it doesn’t seem to have problems with the traffic on the usb bus.

Hello,

I am always looking for ways to reduce the latency of h.264 streaming.

Lately, I stumbled over this site http://moonlight-stream.com .

They do basically the same as we do for fpv, streaming h.264 video to your android device, but for the purpose of game streaming. As they claim to get latency well below 100ms on Android (nVidia Shield, for example), I got motivated and decided to take a look at MediaCodec etc. So I felt free to develop your app further, modified it a bit to reduce latency, and added a side by side mode using openGL.

At the moment I’m getting 170ms end to end at 720p 30fps on my Huawei Ascend p7, which is pretty good for the slow mali450mp gpu, and I hope to be able to reduce it using the “moonlight hacks” (they seem to manipulate the h.264 data depending on the hardware decoder).

Why I’m using Android: with any VR goggles kit (like cardboard) my smartphone is the cheapest and highest resolution pair of video goggles xD

And here is my source code: https://github.com/Consti10/myMediaCodecPlayer-for-FPV

Hi Constantin

Wow, that is really great! Finally someone takes care of my App! As you most likely have noticed it is full of flaws and bugs. It was an afternoon project and the first Android App I wrote, which easily explains its quality 🙂

I will shortly try your app.

Concerning latency: Did you compare your app with the original app? I never measured its latency but always felt it was quite a bit slower than RPI or my laptop. That is also the main reason why the development has stalled. If you could measure the latency of my App with the same method it would be a great way to quantify your improvements.

If you could bring the latency down to a laptop-comparable figure, then that would be really great news. I absolutely share your enthusiasm of using a smartphone as display device. However, there were always the two “issues” in the way of transforming a smartphone into a killer FPV device: Latency and missing wifibroadcast on Android.

You seem to be well on the way with point 1) but how do you take care of point 2)? Do you use a rooted device with a custom kernel as I did, or do you forward the video data from a raspberry pi over normal wifi to the phone?

A third option which would be perfect is to use the wifi hardware directly connected to the phone with the Android USB host api. But this is obviously a lot of work since you would need to reimplement the whole driver in java. I once tried an easier approach: I logged all the USB transactions the kernel sends to the TL-WN722N from plugging it in until it is in monitor mode (so this includes loading the firmware and configuring the device). After that the received packets are very easy to receive, with just a single USB transaction per packet. Unfortunately, I could not get the card to run by replaying the captured init data… I did not analyze the cause so I think this is still a way one could go. Someone who knows a bit more about USB should be able to do this…

Another thing I’ve found lately is that there is a userspace pcap App for Android: https://www.kismetwireless.net/android-pcap/ . In this app someone went through the trouble of actually porting the kernel driver of RTL8187 chips to java… quite impressive. Unfortunately, this is the only chip that is currently supported. But in theory it should work as a RX device.

I have a RTL8187 card somewhere in my attic. When I have the time I will try out the pcap App. If it captures the data as expected it should be straight forward to merge the pcap app with wifibroadcast and the android display app. This way you would just get the App from the Play store, plug the WIFI card in and you are done 🙂

Please keep us updated here about your developments!

For an afternoon project it’s pretty good 🙂 I don’t have a lot of experience with Android either.

1) Unfortunately I had to make some small changes first to get it running on my device (Android 4.4 KitKat), so I can’t compare it directly. But what definitely decreased latency on my device was that dequeueOutputBuffer now runs on a separate thread, pulling the video frames out of the decoder as fast as possible. This gave me (tested) about 40ms less on my device, which brings up another point: the overall latency, and the improvements that can be made, seem to be very dependent on the gpu manufacturer. For example, on my mali 450mp gpu a higher fps actually increases (!) latency, which they actually mentioned somewhere in the moonlight code, and Intel devices need some fixups to enter low latency paths. In the moonlight source code they really have a list of the most common encoders and which hacks they need, but I can’t test it on any device other than mine, so a lot of fixups would have to be made for each device (and I don’t have the skills & time to do that).

Point b) is pretty simple: I have my smartphone connected via usb to the ground pi, make a hotspot (usb tethering) and pipe the rx data with socat to my phone. Works very well xD

I tested your app and I must say that I am quite impressed. I measured the latency to be somewhere around 130ms. My PC is still a bit better at 115ms but I think 130ms is a very good figure (Test device: Nexus 7 2013 running Android 6). Now we have a decent display device with low latency, which is quite a game changer.

I tested the RTL8187 card with wifibroadcast (ALFA AWUS36H) but I could not get it to work. It kind of worked but it dropped too many packets. The video was there but it was unusable. So the approach of using the Android PCAP with this card is not an option 😦 So I guess I’ll stick with my custom kernel and chroot linux. I prefer this over the PI gateway since it reduces cabling.

Hi,

I’m glad to hear it works on your device, even with better latency 🙂 I need a new Smartphone…

First, I did a lot to measure the bottlenecks of the app, and here are the results:

1) About sps/pps data: because I couldn’t find clear information about the h.264 NALU header etc. (and the official documentation is really painful to read) I analyzed the byte data coming from raspicam. I wrote some info about how to filter sps/pps data from the rpi stream into the source code to clear the way for eventual fixups.

2) I added a simple way to measure the latency of the hw decoder. When an input buffer gets filled, I put the current time in ms into it, and when I retrieve an output buffer I can retrieve this timestamp & compute the time the decoder needed.
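The timestamp trick from point 2) can be sketched independently of Android. The following Python class is only an illustration with made-up names; in the app the stamp would presumably travel with the buffer through MediaCodec (for example as its presentation time), which is my assumption and not something I checked in the source.

```python
import time


class DecoderLatencyMeter:
    """Measures how long frames spend inside a decoder via input timestamps."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock        # injectable clock, handy for testing
        self._samples_ms = []

    def stamp_input(self) -> int:
        """Current time in ms; attach this to the input buffer being queued."""
        return int(self._clock() * 1000)

    def record_output(self, stamp_ms: int) -> int:
        """Call with the stamp read back from the output buffer."""
        latency_ms = int(self._clock() * 1000) - stamp_ms
        self._samples_ms.append(latency_ms)
        return latency_ms

    def average_ms(self) -> float:
        """Average decoder latency over all recorded frames (0.0 if none)."""
        if not self._samples_ms:
            return 0.0
        return sum(self._samples_ms) / len(self._samples_ms)
```

Note that this only covers the time inside the decoder; the time until the pixels actually show up on the screen is not included.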

And here’s where the fun starts: the decoder needs (average)

1) ~98ms when using SurfaceView for output

2) ~53ms when using TextureView for output

3) ~53ms when using OpenGl for output.

But, in contrast, I measured the following overall latency (filming a stopwatch):

1)~170ms using SurfaceView

2)~170ms using TextureView

3)~200ms using OpenGl (seems like openGl adds some overhead,unfortunately)

The weird thing is a) why does OpenGl need a shorter time for decoding, but a longer time to get displayed and b) WTF! 55ms for decoding, but where does the rest of the overhead come from? At the moment I’m really confused, but there should be a lot of headroom left for improving latency.

Second: what raspivid parameters did you use on your nexus?

I really like the idea of a plug & play wifi stick on android; either way I always use some “ground station”.

Happy new Year !

Consti

Hi

Your latency numbers are a bit contradictory. Strange… Did you take several measurements with the stopwatch? Assuming that L is the actual latency and T is the time between two frames, the stopwatch method can measure everything between L and L+T (L if you capture the image right after it has been displayed on the screen and L+T if you capture right before the screen is updated). That might explain some strange numbers but possibly not all.
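As a small worked example of that range (the 130ms and 30fps below are just illustrative inputs, not measurements of a specific setup):

```python
def stopwatch_bounds(latency_ms: float, fps: float) -> tuple:
    """Range of readings the stopwatch method can produce: L to L + T."""
    frame_time_ms = 1000.0 / fps   # T, the time between two frames
    return latency_ms, latency_ms + frame_time_ms


low, high = stopwatch_bounds(130.0, 30.0)
print(f"a single reading can land anywhere between {low:.0f} and {high:.0f} ms")
```

So at 30fps two single readings of the same system can differ by up to about 33ms, which is why averaging several measurements helps.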

I used 6mbps, 48fps, -g 48, everything else default values.

Since you dived deep into the MediaCodec: Could you make a guess on how much work it would be to realize the TX side using Android devices? This should give us many advantages:

– Cost: Many people have an unused Android device somewhere. Also, you can get cheap new Androids that are close to the price of Raspberry+SDcard+Camera

– Size: Many phones have a motherboard that’s smaller than a Raspberry zero. My Galaxy Nexus would be such a candidate. If you strip it from everything and get it somehow to autostart then you would have a very tiny device.

– Camera variety: Currently, the only usable sensor is the default Raspberry camera’s sensor. If we could get Androids to do the same thing, users could choose between several sensors

– Possibly latency: Much of the latency of the Raspberry system is created at the TX Raspberry (refer to https://befinitiv.wordpress.com/2015/09/10/latency-analysis-of-the-raspberry-camera/ ). Although it is not clear if Androids are better than a PI there is still a good chance to find at least /some/ phones that are better. After all, the MediaCodec backend differs a lot from vendor to vendor.

Yeah !

I found a way how to reduce the overhead of ~30ms when using openGl instead of surface/texture View.

The solution: calling glUpdatTexImage() and glFinish multiple times in onDrawFrame(). I assume that https://www.khronos.org/registry/gles/extensions/OES/OES_EGL_image_external.txt android buffers about one frame with this extension,even when using “singleBuffermode”.

But i’m not sure if that is needed on all devices – i think it could add some overhead,too. That’s why i didn’t put it into the github apk at the Time.

Hey,

Just upgraded my phone to android 5.1 .

And things started to change xD

First: now the approach “higher fps, lower latency” works on my device, too; at 49fps 720p I now have between 100 and 160ms, on average 130ms latency, which is awesome!

I can’t say if my chip producer included some improvements on the gpu in this android release, too. But from now on I will definitely stick to 5.1, especially as Android adds some features specific to MediaCodec, too (e.g. asynchronous callbacks).

I can’t say for sure, but (on my device) TextureView and OpenGl (which, I think but don’t know exactly, both use the external-texture extension) surprisingly perform better than SurfaceView. In fact, SurfaceView shows artefacts at fps >30 and OpenGl doesn’t.

I can’t wait to try it out in the field using my vr goggles!

Since this conversation is getting bigger, are you active on any forum? I use the same one mentioned by tomm, but it’s German.

Yeah, i’m filming a blurry timer on my pc at 30fps,that’s not the best way,but i always compare sequences of ~10frames to get a latency number.

However,android 5.1 seems to make this testing senseless,since it performs better & different.

Android as rx: yes,that’s definitely worth some thinking.

I would really like to have some better camera (the rpi cam isn’t bad,but) – it’s 2years old ! There don’t seem to be any other mini pc’s available on the market. (Imx freescale is the only dependant where it probably doesn’t need a team of hardware & software engineer to get a camera running) because they almost all use the same api’s ,but none manufacturer releases them for public/open source.

But on any android device these APIs are included by the manufacturer in stagefright, and can be accessed indirectly via MediaCodec.

So, at the cost of giving away some control over the dataflow, I think android is a good way to go, especially as MediaCodec is pretty easy to understand.

Thanks a lot, both of you! I was having problems seeing the image in the field because of the low brightness of my notebook, but streaming to my cell will solve this.

Constantin, I was, for curiosity, trying to mirror my pc screen to my cell, using your android app, and the command lines in your GitHub page, but none of this works for me. Here is the output:

robertito@ubuntook:~/Documentos/FPV/wifibroadcast$ avconv -f x11grab -r 120 -s 800x600 -c libx264 -preset ultrafast -tune zerolatency -threads 0 -f h264 -bf 0 -refs 120 -b 2000000 -maxrate 3000 -bufsize 100 -| socat -b 1024 - UDP4-SENDTO:192.168.4.62:5000

avconv version 9.18-6:9.18-0ubuntu0.14.04.1+fdkaac, Copyright (c) 2000-2014 the Libav developers

built on Apr 10 2015 23:18:58 with gcc 4.8 (Ubuntu 4.8.2-19ubuntu1)

Output #0, h264, to ‘pipe:’:

Output file #0 does not contain any stream

Do you have an idea of what can be causing that problem?

Thanks a lot!

Hey,

Haven’t heard anything here for a long time. But development didn’t stop, and I added a lot of settings to improve usability and compatibility on different devices (though some chipsets, unfortunately, seem not to work).

I have to refine my measurements regarding latency: 1) you can find a latency file in the settings, where you can measure it for your device.

2) the decoder actually (for me) takes less than 10ms; the rest of the lag comes from the path the pixels have to travel until they appear on the screen.

In conclusion, I would say: as long as the decoder doesn’t buffer frames, the app has exactly 16ms more latency than the rpi, and, as a non-Broadcom engineer, I can’t improve this further.

But, and I am actually very proud of this: at http://fpv-community.de/showthread.php?64819-FPV-Wifi-Broadcasting-HD-Video-Thread-zum-Raspberry-HD-Videolink-fon-Befi/page193 you can find a video showing that even nvidia Shield isn’t faster than my app, and nVidia, for example, has much tighter control over the hardware. (Honestly, 70ms lag for Gamestream is pretty ugly.)

@Roberto:

I’m sorry, I made a mistake when copy-pasting the command; it’s fixed now. If you have questions, you can write me an email ( geierconstantinabc@gmail.com )

Hi Constantin

Great job! I follow the developments of your App closely and I am really impressed. I even bought a Cardboard because of it 🙂 Unfortunately, there are still some issues on my “Wiko Fever 4g” (seems to be dropping some of the data, the video is always corrupted). When I have the time I’ll dig a bit deeper into that and see if I can get it to work. If I have a fix, I’ll send you my changes.

Hi Constantine. You are so awesome! Your app is great! Unfortunately I broke my Rpi camera lens, but on my pc it works great!

How’s the OSD going on the app Constantin? It seems like it’s all there, though just no way to get data to it?

Another idea which would be great for it would be head-tracking output from the Android! You could get it to send the position data as values on the Mavlink! Would be quite awesome!

Finally one thing that would help a lot is distortion correction, and having the video in a sphere as opposed to a plane 🙂

I think you’ve done really great work constantin!!

Anyone managed to build an Android image that one can use to just flash the device and use it ?

Bortek – I have been playing with building AOSP from scratch and finally just gave up and loaded NetHunter

https://github.com/offensive-security/kali-nethunter

Constantin – Thanks for the work on your app! I was just using basic Gstreamer stuff on android to get a sidebyside going. Taking it to openGL opens up a lot of options from an overlay perspective.

I am using a RPi3 + Navio2 board with Ardupilot as my flight stack, so my flight controller and my video processor are the same hardware. Alfas for RX and TX and Nexus 6P with the daydream headset as my hardware on the ground.

I think if I get things right I should be able to add controls and a data link through the serial 3DR radio and something like MAVProxy.

Befinitiv – are you still actively working on this project?

Thanks to everyone, this blog has been enormously helpful.

Dear befinitiv, please recheck the “Trackback & Pingback” block for improper links. Or Hacker Planet site was hacked by bad boys – that is why I could not see the original content of it.