FPV in 4K

This post describes how to achieve 4K resolution with a wifibroadcast-based FPV system using H265 encoding. The improved encoding can also be used to get better image quality or reduce bitrate at lower resolutions.

Note: This writeup will not give you a complete image that you can burn on your SD card. It shows a way that makes 4K FPV possible. And due to the image sensor used, it is not traditional 16:9 but instead 4:3 format.

Most of the readers of my blog will know the wifibroadcast system that I developed four years ago. Unfortunately, due to lack of time I was not able to continue working on wifibroadcast anymore. But the community came to my rescue: Many many others have taken over the idea and created incredible images based on the original wifibroadcast. They extended it with so much functionality, broader hardware support, etc. I was really happy to see what they made out of the project.

So as a small disclaimer: This post does not mean that I will pick back up development on the wifibroadcast project. It is just a small hint to the community about new potentials for the wifibroadcast project. These potentials follow of course the wifibroadcast philosophy: To use cheap readily available parts and to make something cool out of them 🙂

The choice of single board computer for wifibroadcast

Why did I choose the Raspberry PI for wifibroadcast? Obviously because of its low price and popularity? Wrong. That was just an extra bonus in the Wifibroadcast soup. The main motivation was that it had a well-supported video encoder on board. Video encoding is one of the key ingredients of wifibroadcast. It does not matter whether you use a MIPS or ARM architecture, a USB or Raspberry camera, Board A or Board B… the only things you really need are a video encoder and a USB 2.0 interface.

And in terms of video encoding, the Raspberry PI sets the bar rather high. The encoder is so well integrated that it just works out of the box. It has gstreamer support if you want to build your own video pipeline, and if not, the camera app directly delivers compressed video data. It cannot get any easier.

Ever since the original wifibroadcast I have been on the lookout for another SBC with a well-supported video encoder, always hoping to lower the latency. Unfortunately, my latest find does not seem to deliver improvements in that area. Instead, it improves image resolution and coding efficiency by using H265 instead of H264 (the Raspberry PI does not support H265 and also cannot go beyond 1920×1080 resolution).

NVIDIA Jetson Nano

Some weeks ago, NVIDIA announced the Jetson Nano, a board targeted towards makers with a rather low price tag of $99. The following features caught my attention:

- Raspberry PI camera connector

- Well-supported H264 and H265 encoding (through gstreamer)

I could not resist and bought one of these boards. Spoiler: It does not seem to improve in terms of latency but definitely in terms of resolution and bit-rate.

Other nice features of this board are the improved processor (quad-core A57 compared to the RPI's A53), more RAM (4GB LPDDR4 vs 1GB LPDDR2) and a stronger GPU (128 cores vs 4?).

Camera modes

The Jetson Nano supports only the Raspberry camera V2. With it, the following camera modes are the most useful for Wifibroadcast:

- 4K (3280 x 2464) at 21fps

- FHD (1920 x 1080) at 30fps

- HD (1280 x 720) at 120fps

In theory, it should also support other frame rates but I could not manage to change this setting. But I did not really try hard so it is probably my fault.

Latency measurements

I did some very quick latency measurements. The setup that I used was my Laptop with gstreamer and a wired Ethernet connection between Laptop and Jetson. The reason for a wired connection instead of Wifibroadcast is that my flat is heavily polluted on all wifi bands. If I had used Wifibroadcast transmission, the latency from the encoding and the transmission in the polluted environment would have mixed together. Using an Ethernet connection was a simple way of separating the encoding latency since I was only interested in that.

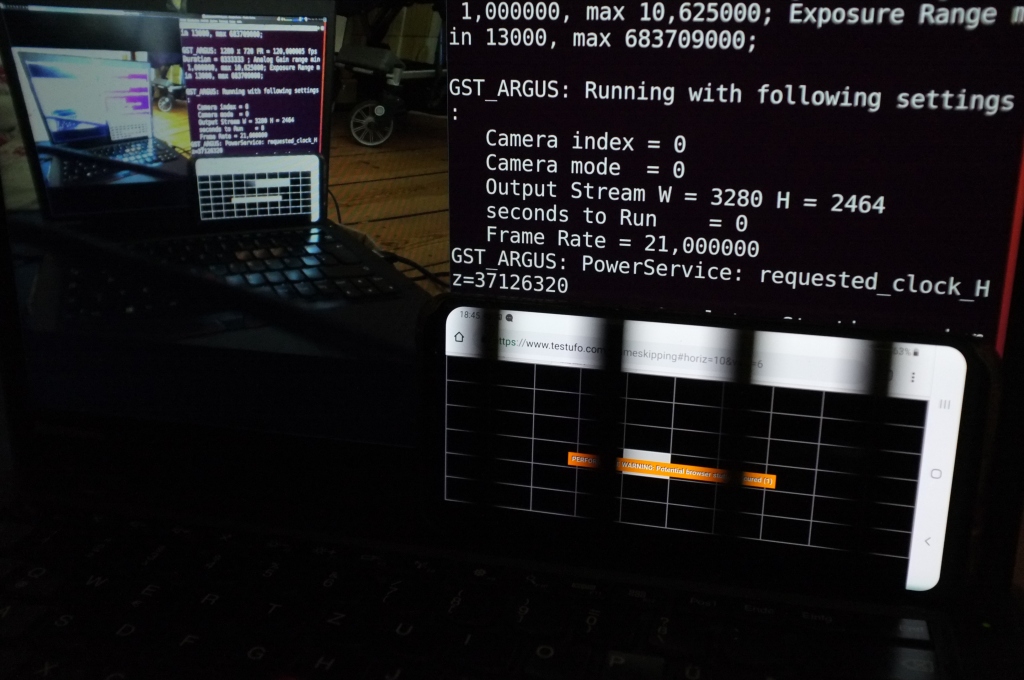

The measurement setup was rather crude, I used the TestUFO page and took a picture of the setup to measure the time distance like shown here (right: “live view”, left: “transmitted view”):

This setup of course introduced a couple of unknowns: the screen update and decoding latency of my laptop and the update speed of my smartphone displaying the TestUFO page. Not quite ideal… but good enough for a first impression.

With this setup, I determined the following latency (only one measurement point per setting):

H265:

- 4K 21fps: 210ms

- FHD 30fps: 150ms

- HD 120fps: 140ms

H264:

- 4K 21fps: 170ms

- FHD 30fps: 160ms

- HD 120fps: 160ms
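To compare modes that run at different frame rates, the latencies above can also be expressed in frame periods (latency × fps / 1000). The following is only a small sketch using awk; `latency_in_frames` is a hypothetical helper name, and the inputs are the single measurement points listed above.

```shell
# Convert a latency in milliseconds into a number of frame periods.
# Usage: latency_in_frames <latency_ms> <fps>
latency_in_frames() {
    awk -v ms="$1" -v fps="$2" 'BEGIN { printf "%.1f\n", ms * fps / 1000 }'
}

latency_in_frames 210 21     # H265 4K  -> 4.4
latency_in_frames 150 30     # H265 FHD -> 4.5
latency_in_frames 140 120    # H265 HD  -> 16.8
latency_in_frames 170 21     # H264 4K  -> 3.6
latency_in_frames 160 30     # H264 FHD -> 4.8
latency_in_frames 160 120    # H264 HD  -> 19.2
```

Since each value comes from one crude measurement, the frame counts are only a rough indication.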

Interpretation of latencies

One thing to note is that the CPU of my laptop was smoking quite a bit while decoding H265 or the high frame rates. So for 4K H265 I would definitely expect improvements with a proper decoding device (like a smartphone or even a second “decoding Jetson”).

Otherwise, I would say that the latencies are definitely usable for FPV scenarios. I think it would be worth investing more work into the Jetson Nano for Wifibroadcast.

Command lines

Receiver: gst-launch-1.0 -v tcpserversrc host=192.168.2.1 port=5000 ! h265parse ! avdec_h265 ! xvimagesink sync=false

Transmitter: gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! omxh265enc bitrate=8000000 iframeinterval=40 ! video/x-h265,stream-format=byte-stream ! tcpclientsink host=192.168.2.1 port=5000

Some remarks: To use H264, replace every occurrence of H265 in the command lines with H264 (h265parse, avdec_h265, omxh265enc and video/x-h265 become h264parse, avdec_h264, omxh264enc and video/x-h264). To change the resolution, adapt the width, height and framerate values in the TX command. Lowering the resolution results in a cropped image, meaning a smaller field of view.
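The codec and resolution substitutions from the remarks can be wrapped in a small shell function. This is only a sketch: the name `tx_pipeline` is made up for illustration, it assumes that omxh264enc accepts the same bitrate and iframeinterval properties as omxh265enc, and it prints the command instead of running it.

```shell
# Print (not run) the transmitter command for a codec and camera mode.
# Usage: tx_pipeline <h264|h265> <width> <height> <fps>
tx_pipeline() {
    codec=$1; width=$2; height=$3; fps=$4
    case "$codec" in
        h264|h265) ;;
        *) echo "unsupported codec: $codec" >&2; return 1 ;;
    esac
    # Host, port, bitrate and iframeinterval are taken from the commands above.
    printf '%s' "gst-launch-1.0 nvarguscamerasrc ! "
    printf '%s' "'video/x-raw(memory:NVMM),width=${width},height=${height},framerate=${fps}/1,format=NV12' ! "
    printf '%s' "omx${codec}enc bitrate=8000000 iframeinterval=40 ! "
    printf '%s' "video/x-${codec},stream-format=byte-stream ! "
    printf '%s\n' "tcpclientsink host=192.168.2.1 port=5000"
}

# Example: show the FHD H264 variant
tx_pipeline h264 1920 1080 30
```

On the Jetson, the printed command could then be started with something like `eval "$(tx_pipeline h265 3280 2464 21)"`. The receiver needs the matching parser and decoder (h264parse and avdec_h264 for H264).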

Quality comparison

One important question is of course: What do you gain from 4K+H265? I extracted some stills out of the video streams to compare things:

H264

H265

The difference in quality becomes quite clear if you switch back and forth between H264 and H265 (note: only 256 colors due to the GIF format):

The bad results from H264 are expected: Even at high bitrates the format is not really intended for 4K resolution. And Wifibroadcast usually runs at lower than usual bitrates (all recordings in this post use the Wifibroadcast-typical 8 Mbit/s).
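One rough way to see how tight 8 Mbit/s is at 4K is to divide the bitrate by the number of pixels per second for each camera mode. The sketch below uses awk; `bits_per_pixel` is a hypothetical helper name, and the resulting number says nothing about perceived quality by itself. It only shows how much less data each pixel gets at 4K.

```shell
# Average number of encoded bits available per pixel.
# Usage: bits_per_pixel <bitrate_bit_per_s> <width> <height> <fps>
bits_per_pixel() {
    awk -v br="$1" -v w="$2" -v h="$3" -v fps="$4" \
        'BEGIN { printf "%.3f\n", br / (w * h * fps) }'
}

bits_per_pixel 8000000 3280 2464 21     # 4K  -> 0.047
bits_per_pixel 8000000 1920 1080 30     # FHD -> 0.129
bits_per_pixel 8000000 1280 720 120     # HD  -> 0.072
```

At the same bitrate, the 4K mode has to get by with roughly a third of the bits per pixel of the FHD mode, which is where a more efficient codec pays off.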

Sample recordings

Here you can find some raw sample recordings. They have been created with the following command line (resolution and codec changed accordingly):

gst-launch-1.0 nvarguscamerasrc ! 'video/x-raw(memory:NVMM),width=3280, height=2464, framerate=21/1, format=NV12' ! omxh265enc bitrate=8000000 iframeinterval=40 ! video/x-h265,stream-format=byte-stream ! filesink location=/tmp/4k.h265
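Both the streaming and the recording command use iframeinterval=40. Assuming this property counts the frames between two keyframes (an assumption based on its name; `gst-inspect-1.0 omxh265enc` on the Jetson should confirm it), the time between keyframes depends on the frame rate of the camera mode. A small sketch with awk, where `keyframe_period` is a hypothetical helper name:

```shell
# Time in seconds between two keyframes.
# Usage: keyframe_period <iframeinterval_in_frames> <fps>
keyframe_period() {
    awk -v n="$1" -v fps="$2" 'BEGIN { printf "%.2f\n", n / fps }'
}

keyframe_period 40 21     # 4K  -> 1.90
keyframe_period 40 30     # FHD -> 1.33
keyframe_period 40 120    # HD  -> 0.33
```

For a lossy link this is a number worth keeping in mind when tuning, since a decoder that loses a keyframe may have to wait about this long for the next one.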

https://www.file-upload.net/download-13588131/4k_h264_static.mp4.html

https://www.file-upload.net/download-13588129/fhd_h264_static.mp4.html

https://www.file-upload.net/download-13588130/hd_h264_static.mp4.htm

https://www.file-upload.net/download-13588134/hd_h265_static.mp4.html

https://www.file-upload.net/download-13588133/fhd_h265_static.mp4.html

https://www.file-upload.net/download-13588135/4k_h265_static.mp4.html

https://www.file-upload.net/download-13588139/4k_h265_moving.mp4.html

https://www.file-upload.net/download-13588141/4k_h264_moving.mp4.html

(Please excuse the use of such a shady file hosting site…)

Summary

In summary I can say: Yes, the Jetson Nano is a useful SBC for a Wifibroadcast transmitter. It also is very likely a good candidate for a Wifibroadcast receiver. Plenty of processor power plus a (compared to Raspberry) capable GPU to draw even the fanciest OSD.

The weight and size might be a bit too high for certain applications. But there are options here as well. The heat sink (the heaviest part of the system) could easily be replaced with something lighter (since you have lots of airflow on a drone). Also, the Nano is in fact a System-on-Module, meaning the whole system is contained on a small PCB the size of a Raspberry PI Zero. The mainboard of the Nano is mostly just a breakout board for connectors. A custom board or even a soldering artwork of wires might turn the Nano into a very compact, very powerful system.

Also, the 4K resolution + H265 seems to improve Wifibroadcast quality quite a bit. Together with a suitable display device (high resolution smart phone for example) this has the potential to boost the video quality to a completely new level.

I really hope that the hint from this post will be picked up by somebody from the community. My gut feeling says that there is definitely potential in this platform and that the crude latency measurements of this post can be improved quite a bit (by using a different receiving unit and/or parameter tuning).

If someone has something that they would like to share, please add a link to the comments. I will then happily integrate this link into this post.

🙂

Thanx.

I will send you an email tomorrow

What are the best results you guys have got with a webcam/action camera? And could you send/link me the image and the code to execute?

@Befinitiv,

Thank you for everything you have done initially to get this project going. I am Justin (HtcOhio) from RCgroups Open.HD which is a spin-off of wifibroadcast.

Link:

https://github.com/HD-Fpv

We also have a Telegram group that makes it so much easier to quickly communicate with people from around the world. After having it open for 2 weeks we already have nearly 45 followers, so there’s significant interest in the overall system (Pi based).

https://t.me/joinchat/LiHVzxU1izb3sif0r8TH8Q

Believe it or not, we are still actively developing additional features and functionality. If there’s any possibility you’d be able to lend us a hand with some of the more complicated parts, even if it’s just some guidance, that would be greatly appreciated.

If not, we would certainly value any input or feedback you might have.

Cheers,

Justin

I was wondering if an Android TV box, like those with the Amlogic S905X2, can be used. It seems that those devices are more powerful and cheaper. Thank you again.

A quick test on the same board: I got 140ms glass-to-glass latency at the highest resolution of the Pi Cam (3280 x 2464) with 21 fps, without much tuning so far.

Please take note of the new element nvv4l2h265enc. And I believe yes, you need a good receiver CPU (on Windows at least) or a phone with a HW decoder.

transmitter:

gst-launch-1.0 -e nvarguscamerasrc maxperf=1 ! 'video/x-raw(memory:NVMM), width=(int)3280, height=(int)2464, format=(string)NV12, framerate=(fraction)21/1' ! nvv4l2h265enc maxperf-enable=1 bitrate=8000000 iframeinterval=40 control-rate=0 ! h265parse ! rtph265pay config-interval=1 ! udpsink host=192.168.1.47 port=5000 sync=false async=false

receiver:

gst-launch-1.0 -vvv udpsrc port=5000 ! application/x-rtp,encoding-name=H265,payload=96 ! rtph265depay ! h265parse ! queue ! avdec_h265 ! autovideosink sync=false async=false -e

the board seems promising and with a lot of cameras out there with FOV of 140 and more, this is a perfect match to start developing for fpv

Thank you, great, what do you think about raspberry pi 4 ? 😀👍

Unfortunately, the RPI4 does not support h265 encoding. Without this new codec 4k is quite impractical (because with h264 you would need a very high bitrate at 4k)

Very interesting.

I haven’t used WifiBroadcast yet, but I’ve been experimenting with gstreamer on a Jetson for low latency streaming too. About the decoding overhead on the receiver: I had the same problem and managed to enable hardware decoding on Windows using the following pipeline:

receiver (windows laptop, gstreamer-1.18.4):

gst-launch-1.0.exe udpsrc port=5000 ! queue ! application/x-rtp,media=video,encoding-name=H264,payload=96 ! rtph264depay ! queue ! h264parse ! d3d11h264dec ! autovideosink

transmitter (jetson nano):

gst-launch-1.0 v4l2src device=/dev/video0 ! 'image/jpeg, width=1280, height=720, framerate=30/1, format=MJPG' ! jpegdec ! nvvidconv ! 'video/x-raw(memory:NVMM), format=NV12' ! omxh264enc iframeinterval=0 ! 'video/x-h264, format=NV12, stream-format=byte-stream' ! h264parse ! rtph264pay pt=96 config-interval=1 ! udpsink host=10.66.66.2 port=5000

Well, it’s using H264 over RTP in this example, but the d3d11 decoder also exists for H265 on Windows, so it should work the same way. The Task Manager confirmed that gstreamer was using the GPU to decode the video stream with this pipeline.

Hello,

Thanks for the amazing work. Do you have a suggestion for the rtl8812au driver on Jetson nano?

Best

Hey,

Oh well, I imagine doing kernel work on the Jetson is really fun, judging from my experience with NVIDIA products. Unfortunately, I cannot help you in that regard. In this post I tested the camera+ISP+encoder but I did not go any further than that (since these are the critical parts of an SBC when it comes to latency) and thus did not port any driver patches to the Jetson. Did you have a look at the Open.HD guys? At some point they planned to add Jetson support, maybe they did that already?